“The Interactive Room” is a groundbreaking project developed by ZAKA’s talented AI Certification students, Abbas Naim and Razan Dakak, in collaboration with the AI Development team at ZAKA, “MadeBy”. The AI team focuses on providing cutting-edge AI solutions to our clients, and this initiative showcases our capabilities in integrating state-of-the-art AI models to create innovative, personalized experiences. Stay tuned as we continue to push the boundaries of AI in innovation, education, and beyond.

Imagine being able to place yourself in iconic movie scenes, swapping faces with your favorite celebrities, and even syncing your voice with their lines. Sounds like a dream? Well, it’s now a reality with the Interactive Room project. By combining advanced technologies like gender classification, deepfake face swapping, and voice synthesis, we have created a platform that lets users experience movies in a way never imagined before.

This blog takes you through the technical development of the project, the challenges we faced, and the solutions we implemented to bring this unique experience to life.

With the growing demand for personalized content and immersive experiences, many projects have tried to blend users into digital content. However, most solutions face three key challenges:

Previous attempts using older deepfake models and generic text-to-speech (TTS) systems either lacked quality or struggled with real-time processing. Our approach leverages state-of-the-art AI models to address these challenges and create a seamless experience for users.

The first step in the Interactive Room pipeline is to detect the user’s gender based on the uploaded image. This classification step helps tailor the face-swapping process by optimizing for gender-specific features.
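Depending on how it is trained, a YOLO-style model returns one or more gender labels, each with a confidence score. Below is a minimal sketch of reducing those scores to a single decision. It assumes a hypothetical `(label, confidence)` list format, a hypothetical `pick_gender` helper, and a 0.5 threshold; it is not the project's actual code.

```python
from typing import List, Optional, Tuple

# Hypothetical prediction format: (class label, confidence), e.g. ("female", 0.91).
Prediction = Tuple[str, float]


def pick_gender(predictions: List[Prediction], min_confidence: float = 0.5) -> Optional[str]:
    """Return the most confident gender label, or None if nothing clears the threshold."""
    confident = [p for p in predictions if p[1] >= min_confidence]
    if not confident:
        return None  # e.g. no clear face found: ask the user for a better photo
    label, _score = max(confident, key=lambda p: p[1])
    return label


if __name__ == "__main__":
    print(pick_gender([("male", 0.42), ("female", 0.88)]))  # female
```

Returning `None` instead of guessing lets the interface ask for a clearer photo before the more expensive face-swap stage runs.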

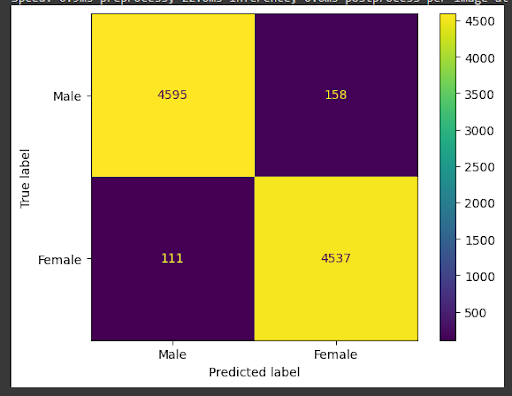

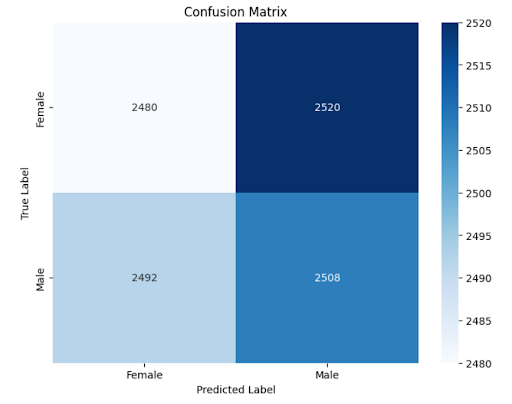

The confusion matrices compare VGG16 and YOLOv10s on gender classification; the first one belongs to the YOLO model.
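For reference, the accuracy, precision, and recall behind a comparison like this can be read directly off a confusion matrix. The sketch below builds the matrix (rows are true labels, columns are predictions) from plain label lists. The function names and toy labels are illustrative and are not the project's evaluation data.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str], labels: Sequence[str]) -> List[List[int]]:
    """matrix[i][j] counts samples whose true label is labels[i] and prediction is labels[j]."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for truth, pred in zip(y_true, y_pred):
        matrix[index[truth]][index[pred]] += 1
    return matrix


def summarize(matrix: List[List[int]], labels: Sequence[str]) -> Dict[str, float]:
    """Overall accuracy plus per-class precision and recall."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(labels)))
    report = {"accuracy": correct / total if total else 0.0}
    for i, label in enumerate(labels):
        true_pos = matrix[i][i]
        predicted = sum(row[i] for row in matrix)  # column sum: everything predicted as this class
        actual = sum(matrix[i])                    # row sum: everything truly in this class
        report[f"precision_{label}"] = true_pos / predicted if predicted else 0.0
        report[f"recall_{label}"] = true_pos / actual if actual else 0.0
    return report


if __name__ == "__main__":
    labels = ["female", "male"]
    truth = ["male", "female", "male", "female"]
    preds = ["male", "male", "male", "female"]
    cm = confusion_matrix(truth, preds, labels)
    print(cm)  # [[1, 1], [0, 2]]
    print(summarize(cm, labels)["accuracy"])  # 0.75
```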

Once the gender is classified, the image, along with the chosen movie or TV scene, is passed through the pipeline to perform deepfake face swapping.
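Scenes often contain more than one person, so the swap has to target one specific face. SimSwap's public repository includes a mode for swapping only a chosen person, and a common way to pick that person is to compare face-identity embeddings (SimSwap itself uses an ArcFace identity encoder). The sketch below shows only that matching step. It assumes embeddings have already been computed for every face in each frame and for a reference crop of the target actor; the toy vectors, the 0.6 threshold, and the helper names are hypothetical.

```python
import math
from typing import List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_target_face(faces: List[Sequence[float]], reference: Sequence[float], threshold: float = 0.6) -> Optional[int]:
    """Index of the face most similar to the reference, or None if none reaches the threshold."""
    best_index, best_score = None, threshold
    for i, embedding in enumerate(faces):
        score = cosine_similarity(embedding, reference)
        if score >= best_score:
            best_index, best_score = i, score
    return best_index


def faces_to_swap(frames: List[List[Sequence[float]]], reference: Sequence[float]) -> List[Optional[int]]:
    """For each frame, which detected face (if any) should receive the user's face."""
    return [pick_target_face(faces, reference) for faces in frames]


if __name__ == "__main__":
    actress = [1.0, 0.0]  # toy 2-D embedding of the target actor
    frames = [[[0.0, 1.0], [0.9, 0.1]], [[0.1, 0.9]]]
    print(faces_to_swap(frames, actress))  # [1, None]
```

Frames where the target actor is not on screen come back as `None` and can be passed through unchanged.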

To compare Roop and SimSwap, the result of each model on the same original scene is shown below:

The image below shows one face swapped in for the famous actress Maguy Bou Ghoson in a scene from the series Julia.

The final step of the pipeline is voice synthesis, where users have two options: record their own voice or keep the original audio extracted from the scene. For the recorded voice, we use a text-to-speech (TTS) model with voice cloning to turn the user’s input into speech that blends with the scene’s original audio.
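The recorded-voice option maps naturally onto XTTS-style voice cloning: the user's recording serves as the speaker reference and the scene's line is the text to speak. Below is a sketch of the two options. It assumes Coqui TTS's `TTS.api` interface with the `xtts_v2` model; `build_voice_track` and `xtts_synthesize` are hypothetical names, and the `"en"` language code is a placeholder to set per scene. The synthesizer is passed in as a parameter so that the branching can be exercised without downloading the model.

```python
from typing import Callable, Optional

# (text, speaker reference wav, output path) -> path of the generated audio
Synthesizer = Callable[[str, str, str], str]


def xtts_synthesize(text: str, speaker_wav: str, out_path: str, language: str = "en") -> str:
    """Speak `text` in the voice heard in `speaker_wav` using Coqui XTTS v2."""
    from TTS.api import TTS  # imported lazily: only needed when cloning is requested

    # Loading the model on every call is slow; a real app would load it once and reuse it.
    tts = TTS("tts_models/multilingual/multi-dataset/xtts_v2")
    tts.tts_to_file(text=text, speaker_wav=speaker_wav, language=language, file_path=out_path)
    return out_path


def build_voice_track(
    scene_text: str,
    original_audio: str,
    user_recording: Optional[str] = None,
    out_path: str = "cloned_line.wav",
    synthesize: Synthesizer = xtts_synthesize,
) -> str:
    """Return the audio file to use: the scene's own track, or the line re-spoken in the user's voice."""
    if user_recording is None:
        return original_audio  # keep the extracted original audio
    return synthesize(scene_text, user_recording, out_path)  # clone the user's voice
```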

Once the voice is synthesized, it can be blended into the original audio from the video, completing the scene substitution.
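Blending can be as simple as lowering ("ducking") the original track while the new line plays and adding the synthesized samples on top. The sketch below works on mono float samples in [-1.0, 1.0]. It assumes both tracks share a sample rate and that the line's start position is known. `overlay_voice` and the 0.2 ducking factor are illustrative choices; a production version would more likely rely on an audio library.

```python
from typing import List, Sequence


def overlay_voice(background: Sequence[float], voice: Sequence[float], start: int, duck: float = 0.2) -> List[float]:
    """Mix `voice` into `background` beginning at sample index `start`.

    Background samples under the voice are scaled by `duck`, the voice is added,
    and the sum is clipped to [-1.0, 1.0]. Voice samples that run past the end
    of the background are dropped.
    """
    if start < 0:
        raise ValueError("start must be non-negative")
    mixed = list(background)
    end = min(len(mixed), start + len(voice))
    for i in range(start, end):
        mixed[i] = max(-1.0, min(1.0, mixed[i] * duck + voice[i - start]))
    return mixed


if __name__ == "__main__":
    scene = [0.5] * 6
    line = [0.5, 0.9]
    print(overlay_voice(scene, line, start=2))
```

A fixed ducking factor can sound abrupt, so short fades at the segment edges are a common refinement.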

To create a seamless pipeline for users, we integrated the following components:

The results of this project, including voice cloning, can be seen here for both genders.

For the user interface, we chose Gradio to provide an easy-to-use platform where users can upload their images, select scenes, and record their voices. Gradio allowed us to focus on building an interactive experience without spending too much time on frontend development.
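Below is a minimal Gradio sketch of that kind of interface. It assumes Gradio 4.x component arguments (for example `sources=["microphone"]` on `gr.Audio`). The scene catalogue and the `run_room` handler are hypothetical placeholders: the handler validates the inputs and returns the selected clip at the point where the real pipeline would return the swapped video.

```python
from typing import Dict, Optional

# Hypothetical scene catalogue: display name -> source clip path.
SCENES: Dict[str, str] = {
    "Scene A": "scenes/scene_a.mp4",
    "Scene B": "scenes/scene_b.mp4",
}


def run_room(face_image: Optional[str], scene: Optional[str], voice_recording: Optional[str]) -> str:
    """Validate the inputs and return the path of the video to display."""
    if not face_image:
        raise ValueError("Please upload a photo of your face.")
    if scene not in SCENES:
        raise ValueError(f"Unknown scene: {scene!r}")
    # Placeholder: the real pipeline (gender classification, face swap, voice)
    # would run here on face_image and voice_recording and return its output video.
    return SCENES[scene]


def build_demo():
    import gradio as gr  # imported lazily so run_room can be used without Gradio installed

    return gr.Interface(
        fn=run_room,
        inputs=[
            gr.Image(type="filepath", label="Your face"),
            gr.Dropdown(choices=list(SCENES), label="Scene"),
            gr.Audio(sources=["microphone"], type="filepath", label="Your voice (optional)"),
        ],
        outputs=gr.Video(label="Result"),
        title="Interactive Room",
    )


if __name__ == "__main__":
    build_demo().launch()
```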

To try the project quickly, clone the GitHub repository and follow its setup instructions to install everything and run the Gradio interface.

GitHub Repository: Interactive Room

The success of the Interactive Room project showcases how AI technologies can revolutionize the entertainment industry. Here’s how our results can have a real-world impact:

Throughout the development of the Interactive Room project, we faced several challenges, particularly in managing computational resources:

While the Interactive Room project has achieved great results, there is still room for improvement:

The Interactive Room project showcases the integration of three advanced technologies: gender classification, deepfake face swapping, and voice synthesis. By employing YOLOv10 for gender detection, SimSwap for face swapping, and Coqui XTTS for voice conversion, we were able to create a system that allows users to step into their favorite movie or TV scenes.

Through this project, we learned to navigate the challenges of training deep learning models within resource constraints, optimizing the pipeline for efficiency without sacrificing quality. Stay tuned for updates as we add images, demo videos, and further refine the system for a broader audience.