Neural networks are the backbone of modern AI, particularly deep learning. Loosely inspired by the human brain, a neural network is a collection of nodes connected across several layers, and each layer transforms the data a little further so that the network gradually learns to interpret it. Graph Neural Networks (GNNs), however, remain underrated despite the big role they can play in the field. In a GNN, each node gathers information passed along from its neighbors, layer after layer, building up an understanding of the data's complex structure that leads to more accurate interpretation.

Now, one might ponder: “If I barely understand my own life, let alone my brain, how am I going to understand this system🙄?” Don’t worry, this journey will guide you through the fascinating world of GNNs, step by step👣.

Knowing how important GNNs are, one should initially ask: How did they come to be? What are their foundational principles?

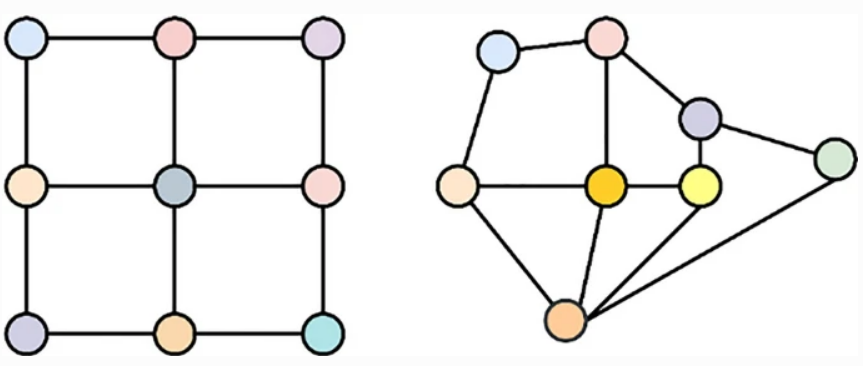

GNNs were heavily influenced by their more famous counterparts, Convolutional Neural Networks (CNNs). While CNNs are designed for grid-structured data, such as images whose pixels sit on a regular grid, GNNs address a different challenge: graph-structured data, such as social networks or molecules, where entities are connected irregularly and each node can have a different number of neighbors. Instead of sliding a fixed-size window over a grid, GNNs follow the graph's own connections, letting each node exchange information with its neighbors and update its state accordingly. This approach helps GNNs capture intricate patterns in data with flexible structures.

Here is a comparison of CNN and GNN structures:

Simple so far, right? Now let’s take it to another level!

How do GNNs work🤩?

Let us start with a graph $G = (V, E)$ and a bit of history. A graph is made up of two essential parts: $V$, the set of vertices (aka nodes), and $E$, the set of edges (links) connecting them. It is also worth mentioning that the graph Laplacian matrix played a crucial role in the development of GNNs.
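Since the graph Laplacian comes up again with GCNs, here is a minimal pure-Python sketch of computing it. It assumes a small, hypothetical undirected graph and the unnormalized definition L = D − A, where A is the adjacency matrix and D is the diagonal matrix of node degrees (normalized variants are also common).

```python
# A small, hypothetical undirected graph G = (V, E).
V = [0, 1, 2, 3]
E = [(0, 1), (1, 2), (2, 0), (2, 3)]


def adjacency_matrix(num_nodes, edges):
    """Build a symmetric 0/1 adjacency matrix from an undirected edge list."""
    A = [[0] * num_nodes for _ in range(num_nodes)]
    for i, j in edges:
        A[i][j] = 1
        A[j][i] = 1
    return A


def laplacian(A):
    """Unnormalized graph Laplacian L = D - A, with D the diagonal degree matrix."""
    n = len(A)
    degrees = [sum(row) for row in A]
    return [[(degrees[i] if i == j else 0) - A[i][j] for j in range(n)] for i in range(n)]


A = adjacency_matrix(len(V), E)
L = laplacian(A)
for row in L:
    print(row)
```

Each row of L sums to zero, because a node's degree on the diagonal exactly cancels the −1 entries for its neighbors.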

When a graph $G$ is fed into a GNN, each node $v_i$ is associated with a feature vector $x_i$, and, optionally, each edge may carry a feature vector $x_{(i,j)}$.

The GNN processes these inputs to compute hidden representations $h_i$ for nodes and $h_{(i,j)}$ for edges.

GNNs update these representations through a process called message passing:
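The exact update rules differ from model to model. As a hedged sketch, one common generic form of a single round $t$ of message passing, assuming sum aggregation over the neighborhood $\mathcal{N}(i)$ of node $v_i$ and the initialization $h_i^{(0)} = x_i$, is:

$$
\begin{aligned}
h_{(i,j)}^{(t+1)} &= f_{\text{edge}}\big(h_i^{(t)},\, h_j^{(t)},\, x_{(i,j)}\big) \\
h_i^{(t+1)} &= f_{\text{node}}\Big(h_i^{(t)},\, \sum_{j \in \mathcal{N}(i)} h_{(j,i)}^{(t+1)},\, x_i\Big)
\end{aligned}
$$

Here $f_{\text{edge}}$ computes a message along each edge and $f_{\text{node}}$ combines a node's current state with the sum of its incoming messages; mean or max are common alternatives to the sum.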

These updates are iterative: nodes refine their representations over multiple rounds of message passing. GNNs like the Graph Convolutional Network (GCN) generalize and combine previous models by sharing the parameters of the edge and node update functions, $f_{\text{edge}}$ and $f_{\text{node}}$, across all edges and nodes.

This approach extends principles from recurrent neural networks to graph data.
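To make the iterative updates concrete, here is a minimal pure-Python sketch of message passing on a tiny, hypothetical three-node graph. The functions `f_edge` and `f_node` are toy, hand-written stand-ins (in a real GNN they would be learned, for example small MLPs). The same two functions are shared by every edge and every node, and all nodes update synchronously from the previous round's states.

```python
# Hypothetical toy graph: 2-dim node features x_i and 1-dim edge features x_(i,j).
node_features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
edge_features = {(0, 1): [0.5], (1, 2): [1.0], (0, 2): [0.2]}
edges = list(edge_features)


def neighbours(node):
    """Neighbours of `node`, treating every edge as undirected."""
    return [b if a == node else a for (a, b) in edges if node in (a, b)]


def edge_feature(i, j):
    """Look up x_(i,j) in either orientation, since the graph is undirected."""
    return edge_features[(i, j)] if (i, j) in edge_features else edge_features[(j, i)]


def f_edge(h_src, h_dst, x_edge):
    """Toy shared edge function: the message is the sender's state scaled by the edge weight.
    h_dst is accepted to match the general signature but ignored in this toy version."""
    return [x_edge[0] * v for v in h_src]


def f_node(h_i, m_i):
    """Toy shared node function: ReLU of the average of the old state and the summed messages."""
    return [max(0.0, 0.5 * a + 0.5 * m) for a, m in zip(h_i, m_i)]


def message_passing(num_rounds):
    h = {v: list(x) for v, x in node_features.items()}  # h_i^(0) = x_i
    for _ in range(num_rounds):
        new_h = {}
        for i in h:
            m_i = [0.0] * len(h[i])  # running sum of incoming messages h_(j,i)
            for j in neighbours(i):
                msg = f_edge(h[j], h[i], edge_feature(j, i))
                m_i = [a + b for a, b in zip(m_i, msg)]
            new_h[i] = f_node(h[i], m_i)
        h = new_h  # synchronous update: every node used the previous round's states
    return h


print(message_passing(num_rounds=1))
print(message_passing(num_rounds=2))
```

Each extra round lets information travel one more hop, so after two rounds node 0 has (indirectly) heard from its neighbors' neighbors as well.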

And yes, equations can look intimidating, but remember, even Allen Iverson had his own way of saying, “Now we talking about equations…? Really, equations?” Keep calm and crunch the numbers!

To use Graph Neural Networks (GNNs) effectively, it’s crucial to understand their reliance on message passing, in which each node updates its representation based on its neighbors. Multi-Layer Perceptrons (MLPs) are often used as the update functions that transform these representations at each step. However, several challenges persist:

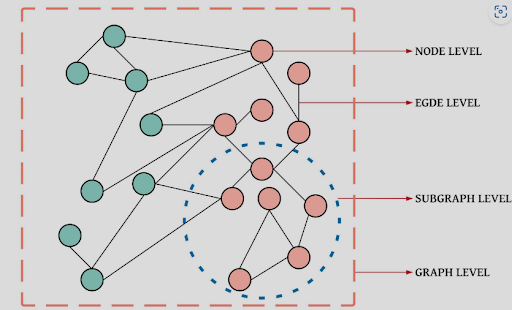

Graph-based tasks vary depending on their focus and can be divided into three main categories:

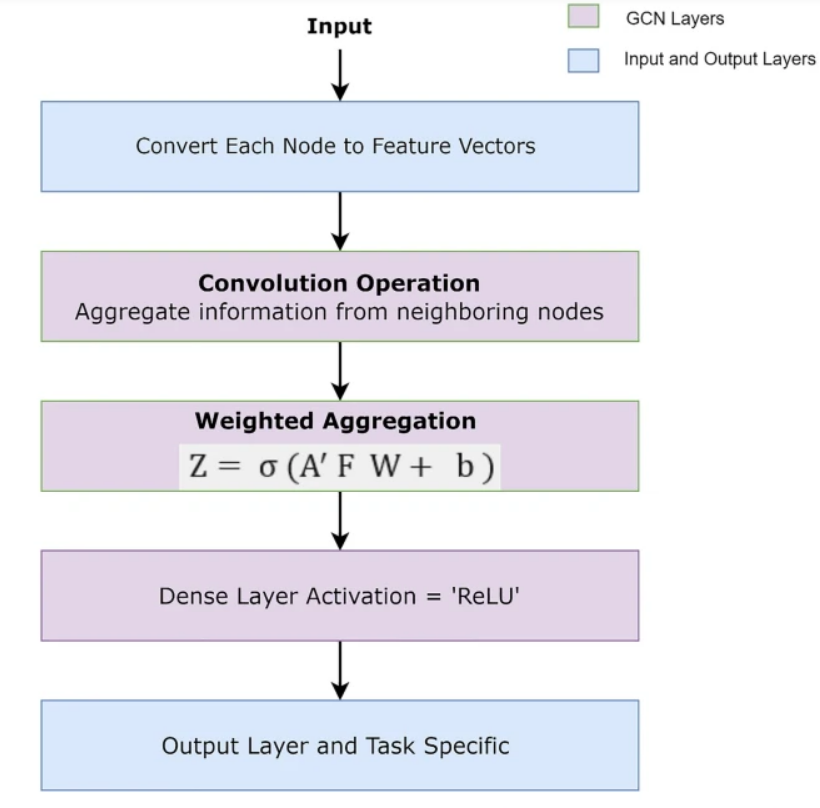
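Whatever the task, a GNN first produces node embeddings; what changes is how they are read out. The sketch below assumes the three categories are the common node-level, edge-level (for example link prediction) and graph-level tasks, and uses made-up embeddings with deliberately simple readouts: a logistic score per node, a dot product per node pair, and mean pooling over the whole graph. The classifier weights are hypothetical; real models usually put learned layers on top.

```python
import math

# Hypothetical final node embeddings h_i produced by some GNN (values are made up).
h = {0: [0.6, 0.35], 1: [0.75, 1.0], 2: [0.6, 1.0]}


def node_probability(h_i, w):
    """Node-level task: a logistic-regression probability for one node."""
    z = sum(a * b for a, b in zip(h_i, w))
    return 1.0 / (1.0 + math.exp(-z))


def link_score(h_i, h_j):
    """Edge-level task (link prediction): dot-product similarity; higher suggests an edge is likelier."""
    return sum(a * b for a, b in zip(h_i, h_j))


def graph_readout(h):
    """Graph-level task: mean-pool all node embeddings into one vector for the whole graph."""
    vectors = list(h.values())
    return [sum(col) / len(vectors) for col in zip(*vectors)]


w = [1.0, -1.0]  # hypothetical node-classifier weights
print([round(node_probability(h[v], w), 3) for v in h])
print(round(link_score(h[1], h[2]), 3))
print(graph_readout(h))
```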

Inhale and exhale, young padawans, for the Force is strong with you. Graph Convolutional Networks (GCNs) are an evolution of graph neural networks rooted in the graph Laplacian. In a GCN, each node aggregates the feature vectors of its neighbors (typically including itself) through a weighted sum, with the weights given by the normalized adjacency matrix. The result is a new feature vector for every node.

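This process is captured in the equation $Z = \sigma(A' F W + b)$,

where $A'$ is the normalized adjacency matrix, $F$ is the matrix of node feature vectors (one row per node), $W$ is a learnable weight matrix, $b$ is a bias term, and $\sigma$ is a non-linear activation function such as ReLU, which turns the weighted sums into the new node representations $Z$.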
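Here is a minimal pure-Python sketch of one GCN layer $Z = \sigma(A' F W + b)$ with ReLU as $\sigma$. It assumes $A'$ is the symmetric normalization with self-loops used in the original GCN paper by Kipf and Welling, $A' = \tilde{D}^{-1/2}(A + I)\tilde{D}^{-1/2}$, where $\tilde{D}$ is the degree matrix of $A + I$; $F$ holds one feature row per node, $W$ is a weight matrix and $b$ a bias vector. The example graph and numbers are hypothetical.

```python
import math


def matmul(X, Y):
    """Plain nested-list matrix product X @ Y."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def normalized_adjacency(A):
    """A' = D^-1/2 (A + I) D^-1/2, with D the degree matrix of A + I (self-loops added)."""
    n = len(A)
    A_hat = [[A[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    d_inv_sqrt = [1.0 / math.sqrt(sum(row)) for row in A_hat]
    return [[d_inv_sqrt[i] * A_hat[i][j] * d_inv_sqrt[j] for j in range(n)] for i in range(n)]


def gcn_layer(A, F, W, b):
    """One GCN layer: Z = ReLU(A' F W + b), with the bias b added to every node's row."""
    AFW = matmul(matmul(normalized_adjacency(A), F), W)
    return [[max(0.0, v + b[j]) for j, v in enumerate(row)] for row in AFW]


A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # hypothetical path graph 0 - 1 - 2
F = [[1.0], [2.0], [3.0]]              # one feature per node
W = [[1.0]]                            # 1x1 weight matrix, kept trivial for readability
b = [0.0]
Z = gcn_layer(A, F, W, b)
print(Z)
```

The self-loops keep each node's own features in the sum, and the symmetric normalization stops high-degree nodes from producing ever-larger values as layers are stacked.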

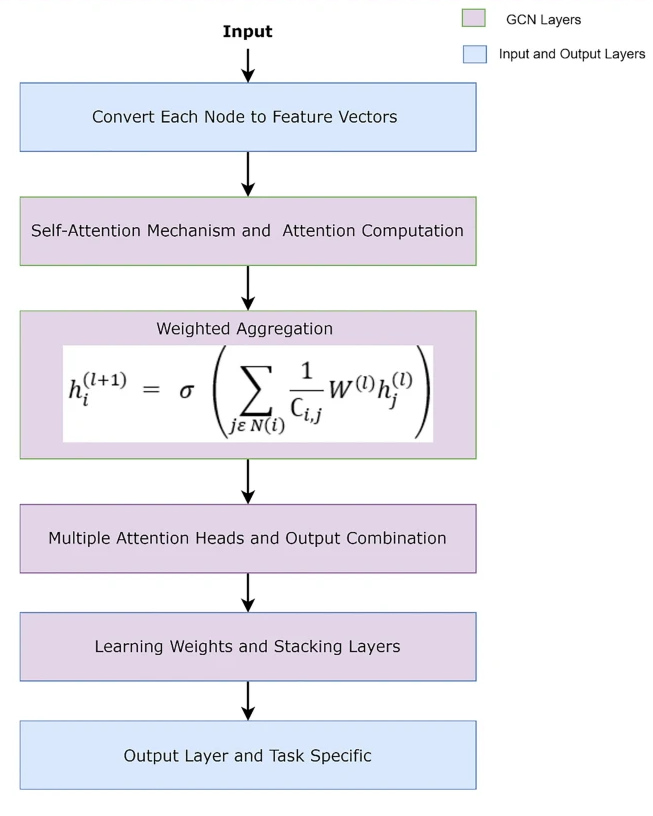

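Graph Attention Networks (GATs) make GCNs look like they’re playing with training wheels! Instead of using fixed weights taken from the normalized adjacency matrix, a GAT learns attention weights that decide how much each neighbor contributes to a node's update.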
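A minimal single-head sketch of GAT-style attention, assuming the formulation from the original GAT paper: a score $e_{ij} = \text{LeakyReLU}(a^\top [W h_i \,\|\, W h_j])$ for every $j$ in $\mathcal{N}(i) \cup \{i\}$, normalized with a softmax, then a weighted sum of the projected neighbor features. The projected features `Wh` and the attention vector `a` are hypothetical numbers, and the final non-linearity is left out.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_attention(i, neighbours, Wh, a):
    """Attention weights alpha_ij of node i over j in N(i) plus i itself (single head)."""
    candidates = [i] + list(neighbours)
    # Wh[i] + Wh[j] concatenates the two lists, i.e. [W h_i || W h_j].
    scores = [leaky_relu(sum(p * q for p, q in zip(a, Wh[i] + Wh[j]))) for j in candidates]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # softmax, shifted by the max for stability
    total = sum(exps)
    return {j: e / total for j, e in zip(candidates, exps)}


def gat_update(i, neighbours, Wh, a):
    """Attention-weighted sum of projected features (before the final non-linearity)."""
    alpha = gat_attention(i, neighbours, Wh, a)
    dim = len(Wh[i])
    return [sum(alpha[j] * Wh[j][k] for j in alpha) for k in range(dim)]


Wh = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}  # hypothetical W h_j for each node
a = [0.1, -0.2, 0.3, 0.4]                          # hypothetical attention vector, length 2 * dim
print(gat_attention(0, [1, 2], Wh, a))
print(gat_update(0, [1, 2], Wh, a))
```

With an all-zero attention vector every score is equal and the weights become uniform, which recovers a plain mean over the neighborhood; learning `a` is what lets a GAT favor some neighbors over others.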

With the evolution of GNNs, many variants have emerged, each adding its unique twist:

Graph Neural Networks (GNNs) are powerful tools for handling graph-structured data, allowing them to capture complex relationships and dependencies within datasets. Initially inspired by CNNs, GNNs have evolved to tackle a wide range of tasks, including node classification, link prediction, and graph-level prediction. Their versatility is evident in applications such as social network analysis, recommendation systems, and drug discovery.

So, while GNNs might not wear capes, they sure do a lot of heavy lifting🏋️, and they make data analysis look pretty cool.