Imagine an AI assistant that doesn’t just speak eloquently but also knows where to look for the latest facts, can connect the dots between complex ideas, and explain how it arrived at its answers.

That’s exactly what Retrieval-Augmented Generation (RAG) combined with Knowledge Graphs (KGs) promises: the fluency of advanced language models married to the precision and structure of graph-based knowledge.

In this post, we’ll journey from the foundations of RAG, dive into the power of KGs, and explore how their integration paves the way for AI that’s not only informative but trustworthy, explainable, and ever-evolving.

LLMs like GPT-4 have redefined what machines can write, but they’re not perfect:

RAG solves these problems by weaving in real-time retrieval: when you ask a question, the system fetches relevant snippets from an external corpus (say, a news database or scientific archive) and feeds them back into the model. Suddenly, your AI assistant can ground its words in up-to-date evidence, boosting accuracy and credibility.
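To make the retrieve-then-generate loop concrete, here is a minimal sketch. It assumes a tiny in-memory corpus and uses plain word-count cosine similarity as a stand-in for a real retriever. The snippets, the function names, and the prompt wording are all illustrative, and the final LLM call is left out.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus; a real system would query a document store.
CORPUS = [
    "Neo4j is a graph database that stores nodes and relationships.",
    "HNSW is an index for approximate nearest neighbor search.",
    "Bananas are rich in potassium.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, corpus, k=2):
    """Return the k snippets most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        corpus,
        key=lambda doc: cosine(q, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, snippets):
    """Place the retrieved snippets ahead of the question."""
    context = "\n".join(f"[{i + 1}] {s}" for i, s in enumerate(snippets))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer using the context."

question = "Which database stores graph nodes?"
top = retrieve(question, CORPUS, k=1)
prompt = build_prompt(question, top)
```

The resulting `prompt` string is what would be sent to the language model. Swapping the word-count vectors for learned embeddings changes `retrieve` but not the overall flow.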

Key Components of RAG

1. Retriever grabs the most pertinent documents using:

2. Fusion Strategy defines how to blend retrievals and queries:

While RAG supercharges LLMs with fresh data, it still treats information as unstructured text—think pages of prose rather than nodes and relationships. That introduces challenges:

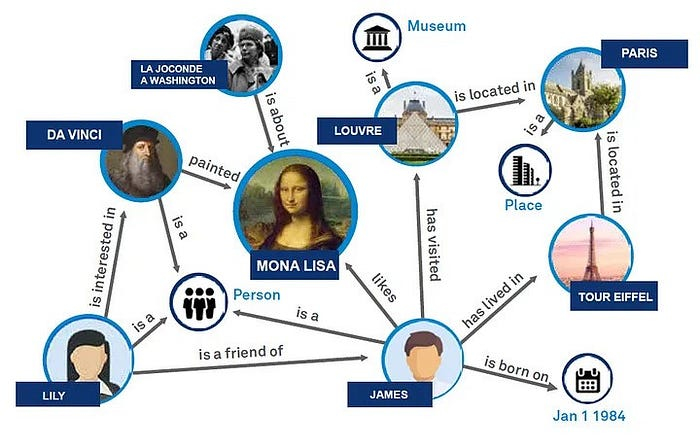

Knowledge graphs are structured maps of entities (nodes) and their relationships (edges), each enriched with properties. This mirrors how people tend to think: as entities linked by meaning.
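As a rough illustration of nodes, edges, and properties, the sketch below models a tiny property graph in plain Python. The class names (`Node`, `Edge`, `KnowledgeGraph`) and the sample facts are illustrative choices, not the API of any graph database.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    id: str
    label: str                      # entity type, e.g. "Person"
    properties: dict = field(default_factory=dict)

@dataclass
class Edge:
    source: str                     # id of the start node
    relation: str                   # relationship type, e.g. "BORN_IN"
    target: str                     # id of the end node
    properties: dict = field(default_factory=dict)

class KnowledgeGraph:
    """Minimal in-memory property graph (illustrative only)."""

    def __init__(self):
        self.nodes = {}
        self.edges = []

    def add_node(self, node):
        self.nodes[node.id] = node

    def add_edge(self, edge):
        # Reject edges that point at unknown nodes.
        if edge.source not in self.nodes or edge.target not in self.nodes:
            raise KeyError("both endpoints must exist before adding an edge")
        self.edges.append(edge)

    def neighbors(self, node_id, relation=None):
        """Ids reachable over outgoing edges, optionally filtered by relation."""
        return [
            e.target
            for e in self.edges
            if e.source == node_id and (relation is None or e.relation == relation)
        ]

kg = KnowledgeGraph()
kg.add_node(Node("curie", "Person", {"name": "Marie Curie", "born": 1867}))
kg.add_node(Node("warsaw", "City", {"name": "Warsaw"}))
kg.add_node(Node("poland", "Country", {"name": "Poland"}))
kg.add_edge(Edge("curie", "BORN_IN", "warsaw"))
kg.add_edge(Edge("warsaw", "LOCATED_IN", "poland"))
```

Following `BORN_IN` from `curie` and then `LOCATED_IN` from `warsaw` is a two-hop traversal, the kind of connected lookup that flat text retrieval struggles to express.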

Core KG Concepts

Why KGs Matter for AI

Imagine combining RAG’s freshness with KG’s structure. Here’s how Graph RAG elevates AI:

1. Building the Graph: Collate data from text, databases, or CSVs. Use NLP techniques such as named entity recognition (NER) and relation extraction to populate nodes and edges. Store the result in a graph database like Neo4j or Memgraph.

2. Turning Graphs into Vectors: Convert nodes and relationships via:

3. Graph-Based Retrieval: When a query arrives, it’s converted into an embedding and matched against the graph’s embeddings using approximate nearest neighbor (ANN) search, for example an HNSW index or a library such as FAISS. Advanced query reformulation leverages graph paths to include semantically related entities.

4. Dynamic Prompt Assembly: Retrieved subgraphs are assembled into the LLM prompt via:

5. Grounded Generation: The LLM synthesizes a response anchored in graph facts, which improves accuracy and leaves a breadcrumb trail of the reasoning paths used.
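The steps above can be sketched end to end on a toy graph. This is a simplified illustration under several assumptions: the graph is a hand-written list of triples rather than the output of NER, `embed` uses word counts in place of learned graph embeddings, and an exhaustive scan stands in for an ANN index. All function names are hypothetical, and the LLM call itself is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical toy graph as (subject, relation, object) triples.
TRIPLES = [
    ("Marie Curie", "BORN_IN", "Warsaw"),
    ("Marie Curie", "WON", "Nobel Prize in Physics"),
    ("Warsaw", "LOCATED_IN", "Poland"),
    ("Pierre Curie", "WON", "Nobel Prize in Physics"),
]

def embed(text):
    """Toy embedding: term counts. A real system would use learned vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def seed_entities(query, triples, k=1):
    """Rank entities against the query (exhaustive scan, standing in for ANN)."""
    entities = sorted({s for s, _, _ in triples} | {o for _, _, o in triples})
    q = embed(query)
    ranked = sorted(entities, key=lambda e: cosine(q, embed(e)), reverse=True)
    return ranked[:k]

def expand(seeds, triples, hops=1):
    """Collect the triples within `hops` edges of the seed entities."""
    frontier, selected = set(seeds), []
    for _ in range(hops):
        reached = set()
        for triple in triples:
            subj, _rel, obj = triple
            if (subj in frontier or obj in frontier) and triple not in selected:
                selected.append(triple)
                reached.update((subj, obj))
        frontier |= reached
    return selected

def build_prompt(query, facts):
    """Serialize the retrieved subgraph as fact lines ahead of the question."""
    lines = [f"- {s} {r} {o}" for s, r, o in facts]
    return (
        "Facts:\n" + "\n".join(lines)
        + f"\n\nQuestion: {query}\nAnswer using only the facts above."
    )

question = "Where was Marie Curie born?"
seeds = seed_entities(question, TRIPLES)
facts = expand(seeds, TRIPLES, hops=1)
prompt = build_prompt(question, facts)
```

Because the prompt lists the exact triples that were retrieved, the answer can be traced back to specific edges. Raising `hops` widens the subgraph at the cost of a longer prompt.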

Healthcare: Graph RAG systems can traverse patient data, drug interactions, and research literature to suggest personalized treatment plans—explaining each recommendation by highlighting the graph paths of related trials and outcomes.

Finance: From fraud detection to risk analysis, KGs map transactions, entities, and regulatory rules. Graph RAG can answer compliance questions with pinpoint citations back to specific nodes (e.g., regulatory clauses).

E-Commerce: Product and user graphs enable nuanced recommendations. Ask, “What laptop fits my design workflow under $1,500?” and Graph RAG not only lists options but shows the relationship between specs, user reviews, and price tiers.

Challenges Ahead

1. Define Your Schema: Collaborate with domain experts to map out key entities and relationships. Aim for modular, reusable ontologies, expressed in standards such as RDF and OWL where that suits your stack.

2. Populate and Validate: Extract entities/relations from text, link to existing nodes, and continuously refine with feedback loops.

3. Choose Embedding Techniques: Start simple (Node2Vec) and iterate toward GNNs as your use case demands deeper reasoning.

4. Optimize Retrieval: Leverage ANN indexes and query reformulation to balance speed and relevance.

5. Engineer Prompts: Experiment with pre-, in-context, and post-injection to find the sweet spot for your domain.

6. Monitor and Refine: Track usage patterns, errors, and user feedback to evolve both your graph and generation strategies.
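For step 3, it may help to see what "starting simple" with Node2Vec involves. The sketch below covers only the first stage, the biased random walks, on a hypothetical toy graph. It assumes an unweighted, undirected graph stored as an adjacency dict. The walks would then be fed to a skip-gram model to produce the actual embeddings, and that stage is omitted here.

```python
import random

# Hypothetical undirected toy graph: node -> set of neighbors.
ADJ = {
    "a": {"b", "c"},
    "b": {"a", "c"},
    "c": {"a", "b", "d"},
    "d": {"c"},
}

def node2vec_walk(adj, start, length, p=1.0, q=1.0, rng=None):
    """Generate one second-order biased walk in the style of Node2Vec.

    p is the return parameter (a low p makes backtracking more likely);
    q is the in-out parameter (a low q favors moving away from the
    previous node).
    """
    rng = rng or random.Random()
    walk = [start]
    while len(walk) < length:
        current = walk[-1]
        neighbors = sorted(adj[current])  # sorted for reproducibility
        if not neighbors:
            break
        if len(walk) == 1:
            # The first step has no previous node, so choose uniformly.
            walk.append(rng.choice(neighbors))
            continue
        previous = walk[-2]
        weights = []
        for candidate in neighbors:
            if candidate == previous:
                weights.append(1.0 / p)   # step back to the previous node
            elif candidate in adj[previous]:
                weights.append(1.0)       # stay close to the previous node
            else:
                weights.append(1.0 / q)   # move farther away
        walk.append(rng.choices(neighbors, weights=weights, k=1)[0])
    return walk

walks = [
    node2vec_walk(ADJ, node, length=10, p=0.5, q=2.0, rng=random.Random(i))
    for i, node in enumerate(sorted(ADJ))
]
```

Each walk plays the role of a sentence whose words are node ids. Tuning `p` and `q` shifts the walks between staying in a local neighborhood and exploring outward, which in turn shapes what the embeddings capture.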

In 2025, AI’s next frontier is not just smarter text generation; it is turning words into structured, traceable knowledge. Graph RAG stands at this intersection, blending the nuance of language with the rigor of graphs. As you embark on your own Graph RAG journey, remember: success lies in the synergy between crisp schema design, robust retrieval, and artful prompt engineering. The result? AI that doesn’t just answer: it reasons and explains, just like us.