“Real-Time Voice Imitation” is an exciting project developed by ZAKA’s talented AI Certification students, Batool Kassem and Daniella Wahab, in collaboration with the AI Development team at ZAKA “MadeBy”. This innovative project demonstrates our team’s expertise in integrating advanced AI models to deliver unique, real-time voice imitation experiences. Stay tuned for more groundbreaking work in AI-driven creativity and beyond! 🚀

Ever imagined having a personal assistant with the voice of Beyoncé? Or perhaps you’d prefer Shakira? Chatting with your favorite celebrity once seemed like a dream, but it can now become a reality thanks to advanced voice cloning models. These models are not only powerful but also surprisingly easy to use. The best part? It all happens in real time!

In this blog, we’ll guide you through the process of creating your own voice cloning model, allowing you to replicate any celebrity’s voice. We’ll also share insights into the methods we used to build the simplest, yet most powerful, voice cloning models available today.

Many existing voice cloning solutions don’t work well for Arabic speakers. Most models are trained on English data, making them less effective for our needs. Our approach is different. We focus on three main goals: fine-tuning for Arabic, achieving real-time voice conversion, and making sure the output sounds great. By collecting our own data and using open-source models, we aim to fill this gap in voice cloning technology.

These goals guided our search for models that could meet our specific needs for effective voice cloning.

We explored several pre-trained open-source models as starting points. To evaluate our options, we documented each model in detail and compared their performance. This allowed us to decide which ones to continue testing and training.

RVC (Retrieval-based Voice Conversion)

We initially chose RVC, which uses two models: HuBERT for feature extraction and net_g for generating audio.

Moving on to the internal structure of net_g, it comprises several components:

These components work in sequence to transform the encoded audio features into a PCM audio format, effectively changing the voice characteristics while maintaining the original pitch and rhythm.

RVC can create high-quality voice clones, especially with sufficient training data from the target voice, and it can adapt to different target voices. The model is also designed to work in real time with very little delay: it processes the user’s voice and converts it to sound like the target celebrity almost instantly. This makes it ideal for live applications, such as a web interface where users interact in real time.

You can learn more about RVC from the RVC repo.

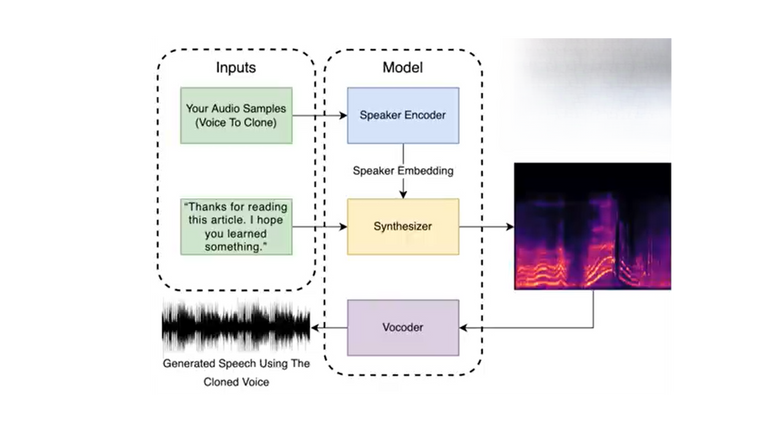

RTVC & SV2TTS

RTVC is designed for real-time voice cloning, which means it can instantly clone a person’s voice using just a short audio sample. It uses zero-shot learning, meaning it doesn’t need to be retrained for every new voice. This feature makes RTVC highly adaptable and quick to use, even with limited data.

Advantages of the RTVC Model:

Three Main Components: an encoder, a synthesizer, and a vocoder.

RTVC is an implementation of the SV2TTS framework. In SV2TTS, the encoder is first trained on a speaker verification task, so the embedding it produces captures the identity of a voice; the synthesizer and vocoder then use that embedding so that the cloned voice closely matches the original.

Challenges with Arabic: RTVC is hard to train for Arabic because there is little high-quality Arabic data available. Each part (encoder, synthesizer, vocoder) needs its own specific data, which makes Arabic adaptation difficult.

Decision to Switch: Despite RTVC’s strengths in real-time cloning, the lack of Arabic training data led us to explore other models like Coqui XTTSv2, which supports Arabic natively. While RTVC remains a powerful tool for English voice cloning, its limitations in Arabic made us look for alternatives that better suit our needs.

Note: Because RTVC implements SV2TTS, the two work the same way, and we treated them as one approach.

You can check the RTVC project here: Real-Time Voice Cloning

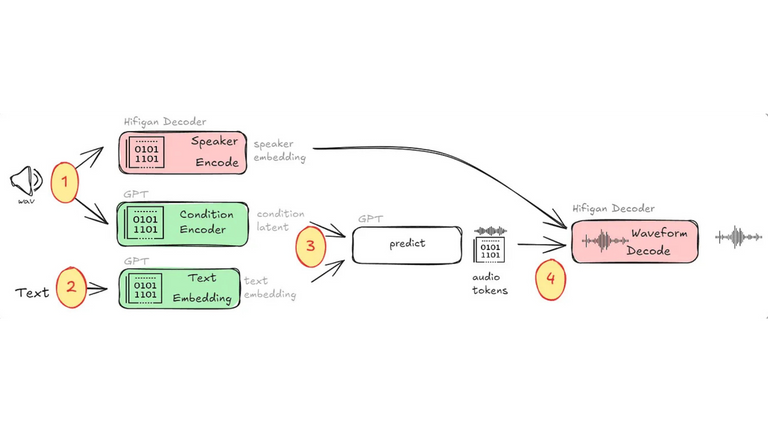

Coqui-XTTSv2:

As an alternative to RTVC, we found that Coqui-XTTSv2 was a good fit, especially because it can clone a voice from a short audio sample. Like RTVC, it is a text-to-speech model: we provide text as input, and it speaks that text in the voice of the given audio sample.

Coqui-XTTSv2 is a versatile TTS model that supports 16 languages, including Arabic. It can work in real time on a GPU, and it also runs on a CPU at a slower speed. A key feature is its streaming capability, which allows for near real-time performance by generating audio snippets as text chunks are processed. XTTSv2 excels in voice cloning, requiring only a short audio clip to replicate a voice. Using a Perceiver model, it captures speaker details more effectively than models like Tortoise, which gives a more consistent voice output. It also offers emotion and style transfer, so the cloned voice can mimic the expressiveness of the original audio.
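To make the streaming idea more concrete, here is a minimal sketch of chunk-by-chunk generation. It does not use the Coqui API: `split_into_chunks`, `stream_speech`, and `fake_synthesize` are hypothetical names, and the stand-in synthesizer simply returns bytes where a real TTS call would return audio. The point is only that the first piece of audio becomes available after the first chunk, not after the whole text.

```python
import re
from typing import Callable, Iterator, List

# Whitespace that follows sentence-ending punctuation
# (\u061F is the Arabic question mark).
_SENTENCE_BREAK = re.compile(r"(?<=[.!?\u061F])\s+")


def split_into_chunks(text: str) -> List[str]:
    """Split text into sentence-like chunks, dropping empty pieces."""
    return [part.strip() for part in _SENTENCE_BREAK.split(text) if part.strip()]


def stream_speech(text: str, synthesize: Callable[[str], bytes]) -> Iterator[bytes]:
    """Yield the audio for each chunk as soon as that chunk is synthesized."""
    for chunk in split_into_chunks(text):
        yield synthesize(chunk)


def fake_synthesize(chunk: str) -> bytes:
    # Hypothetical stand-in for a real TTS call; returns placeholder bytes.
    return chunk.encode("utf-8")


if __name__ == "__main__":
    for audio in stream_speech("Hello there. How are you?", fake_synthesize):
        print(len(audio), "bytes ready for playback")
```

In a real application, each yielded chunk would be handed to the audio player while the next one is still being generated.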

Brief architecture:

More about Coqui-XTTSv2: Coqui Documentation

Data for RVC:

RVC is a model that uses the target voice for training. For this, we decided to collect celebrity voices primarily from YouTube videos and podcasts. We ensured the data was clean by removing any background noise using online vocal removal tools, resulting in clean celebrity voice recordings in WAV format.

RVC doesn’t require a large amount of data to perform well, but it’s important to include a variety of voice states (e.g., different tones, emotions, or contexts) to capture as many vocal features as possible. We found that around 18 minutes of clean, diverse voice samples were enough to build a decent model.

Note: It’s recommended to split the audio into smaller snippets to help the model capture finer details during training. In our case, we split the audio into 10-second snippets. We used a tool called Audacity, which makes the splitting process simple and efficient.

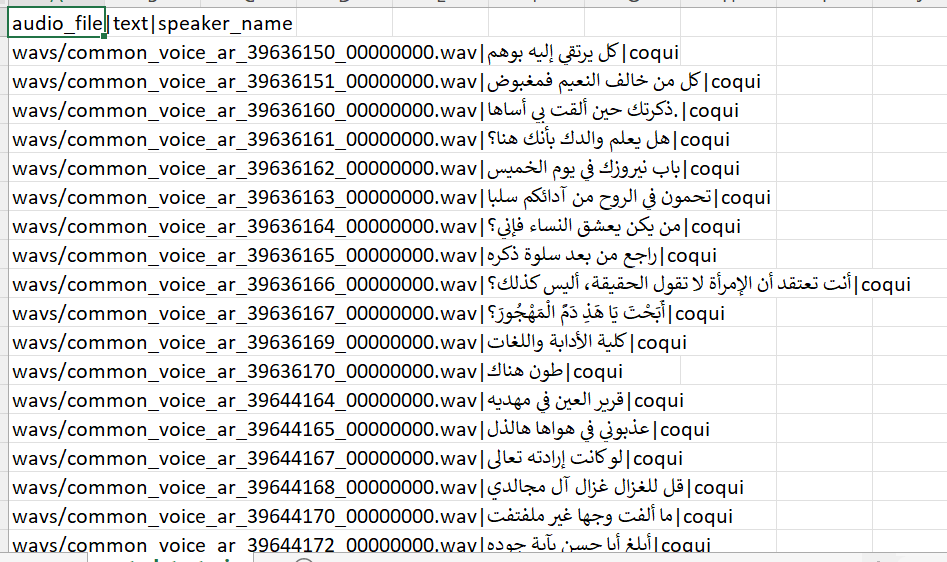
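We did the splitting by hand in Audacity, but the same step can be scripted. The sketch below is an illustrative alternative, not the workflow we used: it relies only on Python's standard `wave` module, and the function name `split_wav` and the output naming scheme are our own assumptions.

```python
import wave
from pathlib import Path
from typing import List


def split_wav(src: str, out_dir: str, snippet_seconds: float = 10.0) -> List[Path]:
    """Split a PCM WAV file into consecutive snippets of snippet_seconds each."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    with wave.open(src, "rb") as reader:
        params = reader.getparams()
        frames_per_snippet = int(params.framerate * snippet_seconds)
        index = 0
        while True:
            frames = reader.readframes(frames_per_snippet)
            if not frames:
                break  # reached the end of the recording
            target = out / f"{Path(src).stem}_{index:04d}.wav"
            with wave.open(str(target), "wb") as writer:
                # Copy channels, sample width, and rate from the source;
                # the frame count in the header is corrected on write.
                writer.setparams(params)
                writer.writeframes(frames)
            written.append(target)
            index += 1
    return written
```

With 10-second snippets, 18 minutes of audio comes out to 18 × 60 / 10 = 108 files; the last snippet of a recording is simply shorter if the length is not a multiple of 10 seconds.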

Data for Coqui-XTTSv2:

We trained Coqui-XTTSv2 on multiple Arabic speakers, using a dataset of 24 different voices with a total of one hour of recorded audio. We avoided celebrity voices to keep the model’s zero-shot functionality intact.

One challenge we encountered was that Coqui-XTTSv2 is a text-to-speech model, so the training data must be in LJSpeech format, which pairs each audio clip with a transcription of what is spoken. To meet this requirement, we used Fast Whisper, a model that generates text from audio. After obtaining the transcriptions, we used a short script to structure the data in LJSpeech format, organizing it into two CSV files: one for training and one for evaluation.

The code used to format the data is available here: LJspeech Formatter Code
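For readers who want a feel for this step, here is a minimal sketch of such a formatter. It is not the script linked above: the function name, the output file names, and the 15% evaluation split are assumptions made for illustration. The three-column, pipe-delimited row (`id|transcription|normalized transcription`) follows the layout of the LJSpeech dataset's metadata file.

```python
import random
from pathlib import Path
from typing import Dict, Tuple


def write_ljspeech_metadata(
    transcripts: Dict[str, str],
    out_dir: str,
    eval_fraction: float = 0.15,  # assumed split, adjust to your dataset
    seed: int = 0,
) -> Tuple[Path, Path]:
    """Write pipe-delimited train and eval metadata files in LJSpeech layout."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    rows = []
    for wav_name, text in sorted(transcripts.items()):
        # The pipe is the column delimiter, so it must not appear in the text.
        clean = " ".join(text.replace("|", " ").split())
        # Columns: clip id (file name without extension), text, normalized text.
        rows.append([Path(wav_name).stem, clean, clean])

    random.Random(seed).shuffle(rows)  # reproducible shuffle before splitting
    n_eval = max(1, int(len(rows) * eval_fraction))

    train_path = out / "metadata_train.csv"  # hypothetical file names
    eval_path = out / "metadata_eval.csv"
    for path, split_rows in ((eval_path, rows[:n_eval]), (train_path, rows[n_eval:])):
        with open(path, "w", encoding="utf-8", newline="") as handle:
            for row in split_rows:
                handle.write("|".join(row) + "\n")
    return train_path, eval_path
```

The `transcripts` dictionary maps each WAV file name to its transcription, which in our case came from the speech-to-text step described above.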

1. Training for RVC:

During RVC training, we adjusted several parameters that affected the final model. One key parameter was the model architecture, where we chose between RVC v1 and RVC v2. The main difference is in the HuBERT feature vector’s dimensionality: v1 has 256 dimensions, while v2 has 768 dimensions. The higher dimensionality in v2 captures more detailed voice features, leading to better voice conversion.

Another important parameter was the target_sample_rate. A higher sample rate captures more detail, but it also demands more from your machine’s processing capabilities. Balancing performance and machine resources was critical here.
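The trade-off can be put into rough numbers. The sketch below is a back-of-the-envelope calculation, not part of RVC: the three sample rates, the 10-second clip length, and 16-bit mono audio are illustrative assumptions. It reports the highest frequency a given rate can represent (half the sample rate) alongside how many samples and bytes each clip contains.

```python
from typing import Tuple


def sample_rate_cost(
    sample_rate_hz: int, seconds: float = 10.0, bytes_per_sample: int = 2
) -> Tuple[float, int, int]:
    """Return (highest representable frequency in Hz, samples, bytes) for mono PCM."""
    nyquist_hz = sample_rate_hz / 2  # frequencies above this cannot be captured
    samples = int(sample_rate_hz * seconds)
    return nyquist_hz, samples, samples * bytes_per_sample


for rate in (32000, 40000, 48000):  # illustrative rates
    nyquist, samples, size = sample_rate_cost(rate)
    print(f"{rate} Hz: up to {nyquist:.0f} Hz, {samples} samples, {size} bytes per clip")
```

Moving from the lowest to the highest of these rates means 50% more samples for the model to process per second of audio, in exchange for a wider frequency range.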

Note: It’s recommended to train the model for at least 200 epochs, depending on the size and quality of your dataset, to achieve optimal results.

2. Training for Coqui-XTTS:

The training for Coqui-XTTS was slightly different. Instead of focusing on a specific voice, we fine-tuned the model on Arabic speech, which required fewer epochs to avoid overfitting. We completed training in just 6 epochs.

Output Quality: The model works well, but its output depends on the quality of the training data. During testing, we found that while the voice was clear, some Arabic pronunciations could be improved.

Real-Time Use: Although Coqui-XTTS aims to generate speech quickly, it still needs some work to reduce delays. We are looking into ways to make the process faster for a better user experience.

It’s important to note that these two models are not directly comparable at this stage. The RVC model sounds better, mainly because it is trained on the target voice itself. In contrast, the Coqui model is fine-tuned for the Arabic language and may sound more robotic due to the difference in training data.

Deployment Phase

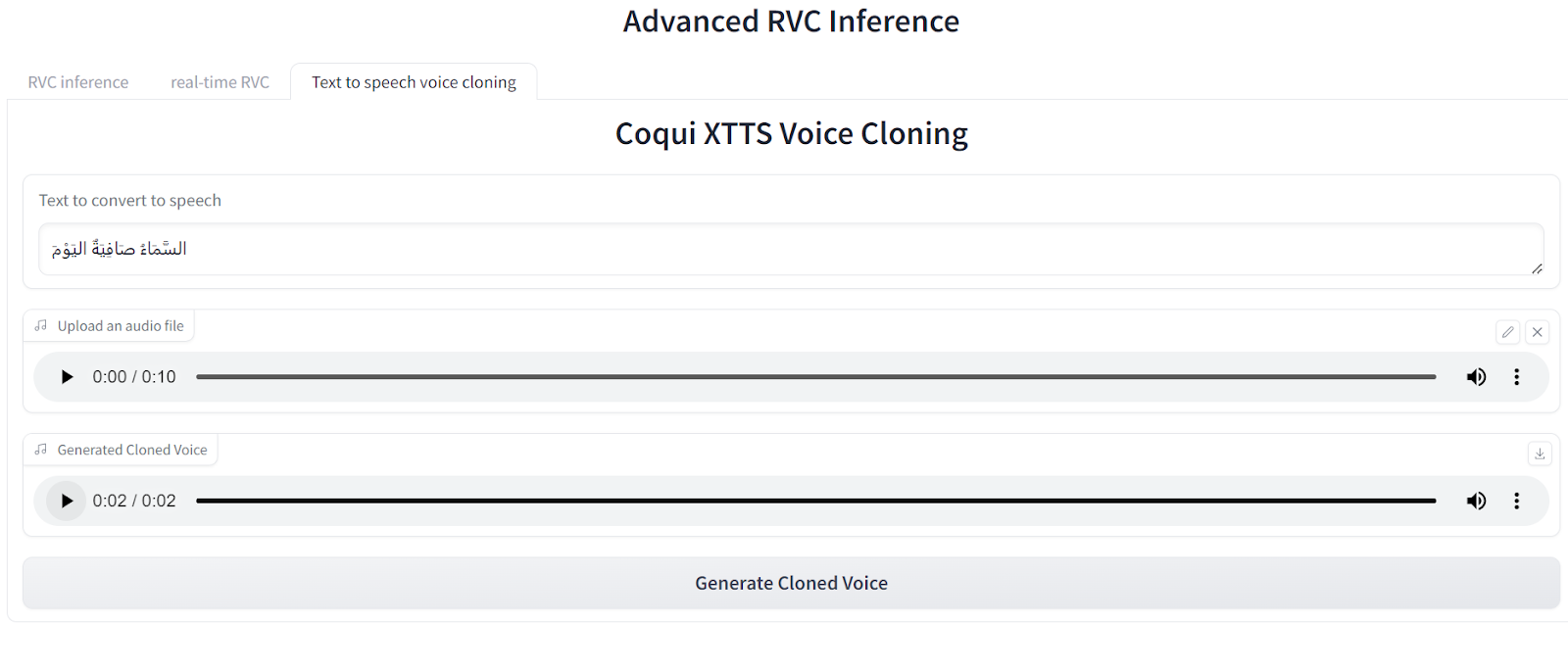

In our deployment, we used the Gradio interface for its simplicity and user-friendliness, making it suitable for real-time interaction. We divided our interface into tabs, each with different functionalities. The first tab is for RVC inference, the second tab allows users to upload their trained RVC model using the W-okada software and test it in real time, and the last tab is for Coqui XTTS model inference.

Once the web UI is launched, you can run RVC inference by specifying the model, providing the input audio file, choosing one of the pitch extraction algorithms, and pressing the conversion button. Pick the algorithm according to your needs:

PM: Best for speed. Use it when you need fast processing and real-time performance but can compromise a bit on accuracy.

Crepe: Best for high accuracy. Use this when you want the most accurate pitch detection, even if the audio is noisy or complex, but don’t mind slower performance.

RMVPE: Best for real-time applications. Use this when you need a good balance between speed and accuracy, especially for real-time voice processing.

Harvest: Best for smooth, natural-sounding output. Use this when you want high-quality and natural pitch results, even if it takes a bit longer to process.
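The guidance above can be captured in a small lookup, which is handy if you want to preselect an algorithm in your own scripts. This is a hypothetical helper, not part of our interface: the priority labels are our own, and the lowercase method names are an assumption about how the algorithms are identified in code.

```python
# Maps what you care about most to a pitch extraction algorithm.
F0_METHOD_BY_PRIORITY = {
    "speed": "pm",            # fastest, slightly less accurate
    "accuracy": "crepe",      # most accurate, slower
    "balanced": "rmvpe",      # good speed/accuracy balance for real-time use
    "smoothness": "harvest",  # smooth, natural output, takes longer
}


def pick_f0_method(priority: str) -> str:
    """Return the pitch extraction algorithm suggested for a given priority."""
    key = priority.strip().lower()
    if key not in F0_METHOD_BY_PRIORITY:
        options = ", ".join(sorted(F0_METHOD_BY_PRIORITY))
        raise ValueError(f"Unknown priority {priority!r}; choose one of: {options}")
    return F0_METHOD_BY_PRIORITY[key]
```

For example, `pick_f0_method("balanced")` returns `"rmvpe"`.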

Below is a demo of the second tab, where we showcase the real-time RVC capabilities:

Lastly, in the Coqui XTTS tab, you upload an audio sample and the model speaks your desired text in that voice.

You can access the GitHub repo for this project here.

In this project, we explored voice cloning using RVC and Coqui XTTS models. While we achieved promising results, there are areas for improvement:

Overall, this project highlighted the importance of quality data and model optimization for effective voice cloning. There’s plenty of room to improve our models for better user experiences in the future.