LIA: The Multilingual 3D Avatar is an innovative project developed by ZAKA’s talented AI Certification students, Sary Mallak and Albertino Kreiker, in collaboration with the AI Development team at ZAKA “MadeBy”, showcasing our expertise in real-time multilingual interaction. This project redefines what is possible with virtual assistants. With its ability to seamlessly handle Arabic, English, and French, LIA is not just a technological marvel but a practical tool poised to transform industries like customer service, education, and tourism. Stay tuned as we continue to push the boundaries of AI innovation! 🚀

Imagine a digital avatar that speaks to you in your native language. In customer service, this avatar could handle inquiries in multiple languages, delivering responses that feel truly personal. In education, it could serve as a virtual tutor, effortlessly switching languages to reach a broader audience.

Our team set out to make this a reality by developing a 3D avatar capable of engaging in real-time multilingual communication, with seamless speech-to-text, text generation, and lip synchronization across languages. By harnessing advanced language models and speech synthesis tools, this project aims to break down language barriers in real time and enhance user experiences across industries.

Our main challenge was designing a 3D avatar with real-time speech recognition, text generation, and synchronized lip movements in Arabic, English, and French. Traditional speech-to-text and text-to-speech systems focus on individual languages, making it difficult to build a fluid, multilingual experience.

Arabic, in particular, introduced complexities due to its unique script and linguistic structure, requiring highly accurate transcription and lip sync. To create a distinct, recognizable voice for the avatar without cloning techniques, we customized a text-to-speech model to bring the avatar’s persona to life. Finally, we needed to optimize every part of the workflow to ensure low latency for responsive interactions, even with complex language models running simultaneously.
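Optimizing latency starts with measuring where the time goes. The sketch below is a minimal, hypothetical timing harness for a speech-to-text, text generation, text-to-speech, and lip-sync chain. The stage functions (`fake_asr`, `fake_llm`, `fake_tts`, `fake_lipsync`) are placeholder stubs we made up to stand in for Whisper, LLaMA, gTTS, and Rhubarb; they are not the project's actual code.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

# A stage is a (name, function) pair; each function consumes the previous stage's output.
Stage = Tuple[str, Callable[[Any], Any]]


def run_pipeline(stages: List[Stage], payload: Any) -> Tuple[Any, Dict[str, float]]:
    """Run the stages in order and record wall-clock latency (seconds) for each one."""
    timings: Dict[str, float] = {}
    for name, stage in stages:
        start = time.perf_counter()
        payload = stage(payload)
        timings[name] = time.perf_counter() - start
    return payload, timings


# Hypothetical stubs standing in for Whisper, LLaMA, gTTS and Rhubarb.
def fake_asr(audio: bytes) -> str:
    return "marhaba"


def fake_llm(text: str) -> str:
    return f"reply to: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def fake_lipsync(audio: bytes) -> List[Tuple[float, str]]:
    return [(0.0, "X"), (0.1, "B")]


if __name__ == "__main__":
    stages: List[Stage] = [
        ("asr", fake_asr),
        ("llm", fake_llm),
        ("tts", fake_tts),
        ("lipsync", fake_lipsync),
    ]
    cues, timings = run_pipeline(stages, b"\x00\x01")
    total = max(sum(timings.values()), 1e-9)  # guard against a zero total
    for name, secs in timings.items():
        print(f"{name:<8} {secs * 1000:.3f} ms ({secs / total:.0%})")
```

Swapping a stub for a real model call leaves the harness unchanged, so per-stage numbers can be compared as components are replaced.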

In developing a multilingual 3D avatar capable of real-time speech interaction, we relied on several advanced technologies, each selected for its capabilities in speech processing, text generation, and animation. Here is an overview of these tools and the strengths they bring to such a project.

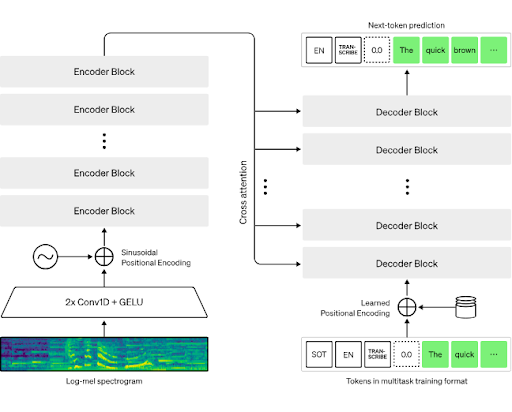

Whisper is an automatic speech recognition (ASR) model developed by OpenAI, known for its robust multilingual capabilities. Trained on a wide range of languages and dialects, Whisper’s architecture features an encoder-decoder design with attention mechanisms that allow it to handle diverse linguistic inputs with high accuracy.
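Because Whisper detects the spoken language automatically, one practical step is to restrict its guess to the languages the avatar actually supports. In the openai-whisper package, `model.detect_language(mel)` reports per-language probabilities, and `model.transcribe(audio, language=...)` accepts a fixed language code. The helper below is a hypothetical sketch (the name `pick_language`, the English fallback, and the sample scores are our assumptions) that picks the most probable of Arabic, English, and French from such a probability dict.

```python
from typing import Dict, Iterable

SUPPORTED_LANGUAGES = ("ar", "en", "fr")  # the three languages LIA handles


def pick_language(
    probs: Dict[str, float],
    supported: Iterable[str] = SUPPORTED_LANGUAGES,
    fallback: str = "en",
) -> str:
    """Return the most probable language among the supported ones.

    `probs` maps language codes to probabilities, the shape of the dict that
    openai-whisper's `detect_language` reports for a single clip.
    """
    allowed = set(supported)
    candidates = {code: p for code, p in probs.items() if code in allowed}
    if not candidates:
        return fallback
    return max(candidates, key=candidates.get)


if __name__ == "__main__":
    # Made-up scores where an unsupported language narrowly ranks first overall.
    scores = {"fa": 0.48, "ar": 0.45, "en": 0.04, "fr": 0.03}
    print(pick_language(scores))  # ar
```

The chosen code could then be passed back to Whisper as `language=` so transcription is not routed to a language the rest of the pipeline cannot answer in.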

LLaMA (Large Language Model Meta AI) is a transformer-based language model designed to generate human-like text responses. Created by Meta AI, LLaMA’s large-scale training across various topics makes it effective in understanding and generating coherent, contextually accurate responses.

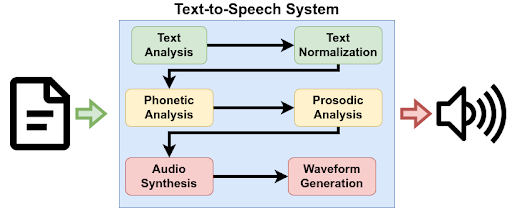

Google Text-to-Speech (gTTS) is a Python library and command-line tool that interfaces with Google Translate’s text-to-speech API, providing voice synthesis in many languages. Because synthesis runs on Google’s servers rather than locally, it is a lightweight, widely used option for applications that need quick voice output.

Rhubarb Lip Sync is an open-source tool for creating lip-sync animations from audio files. It is commonly used to enhance animated characters with synchronized mouth movements based on spoken content.
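Rhubarb is typically driven from the command line. The sketch below builds an invocation that exports mouth cues as JSON and, as an assumption on our part, selects Rhubarb’s `phonetic` recognizer for non-English speech, since its default PocketSphinx recognizer targets English. The helper names are hypothetical, and the flag names follow Rhubarb’s README as we understand it, so check them against the installed version. Note also that Rhubarb reads WAV or Ogg Vorbis files while gTTS writes MP3, so an audio conversion step (for example with ffmpeg) is assumed before this call.

```python
import shutil
import subprocess
from typing import List


def build_rhubarb_command(audio_path: str, json_path: str, language: str) -> List[str]:
    """Build a Rhubarb Lip Sync command line that exports mouth cues as JSON.

    Rhubarb's default PocketSphinx recognizer is meant for English; its
    "phonetic" recognizer is the option intended for other languages.
    """
    recognizer = "pocketSphinx" if language == "en" else "phonetic"
    return [
        "rhubarb",
        "--exportFormat", "json",
        "--recognizer", recognizer,
        "--output", json_path,
        audio_path,  # Rhubarb reads WAV or Ogg Vorbis, not MP3
    ]


def run_rhubarb(audio_path: str, json_path: str, language: str) -> None:
    """Run Rhubarb, assuming its executable is on PATH."""
    if shutil.which("rhubarb") is None:
        raise FileNotFoundError("rhubarb executable not found on PATH")
    subprocess.run(build_rhubarb_command(audio_path, json_path, language), check=True)


if __name__ == "__main__":
    print(" ".join(build_rhubarb_command("reply.wav", "reply.json", "ar")))
```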

A combination of backend technologies and deployment tools was used to enable efficient data handling and real-time interaction.

To enable real-time speech recognition, we started with Wav2Vec2, a self-supervised speech recognition model developed by Meta AI that learns speech representations directly from raw audio. Wav2Vec2 achieved a word error rate (WER) of 25.81%. To improve accuracy across Arabic, English, and French, we switched to the Whisper model, which lowered the WER to 15.1%. Whisper’s multilingual capabilities and automatic language detection also streamlined the user experience by eliminating the need for manual language selection.
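The WER figures compare model transcripts against reference transcripts. The source does not describe its evaluation script or test set, so the sketch below only shows the standard definition: WER = (substitutions + deletions + insertions) / number of reference words, computed with a word-level Levenshtein distance. Real evaluations usually normalize text first (case, punctuation, and for Arabic, diacritics), which this sketch omits.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the previous ref prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion (the): 2 errors / 6 words.
    print(f"{word_error_rate('the cat sat on the mat', 'the cat sit on mat'):.2%}")  # 33.33%
```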

For text generation, we initially used LLaMA 3.1, fine-tuning it on datasets such as Arabica_QA and TyDi QA, as well as custom datasets related to Lebanese culture. When LLaMA 3.2 launched, we transitioned to the updated model for better native Arabic support. Our custom datasets ensured culturally accurate responses, especially for Arabic input, while retrieval-augmented generation (RAG) added depth to answers by retrieving relevant reference material and supplying it to the model alongside the user’s question.
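The RAG step can be sketched as: score stored passages against the question, keep the best matches, and place them in the prompt before the model answers. The source does not say which retriever or embedding model was used, so this hypothetical sketch swaps in a toy bag-of-words cosine similarity; the passages are illustrative, and the returned prompt would be what gets sent to the fine-tuned LLaMA model.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    # \w+ is Unicode-aware in Python 3, so Arabic and French words are kept too.
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k passages most similar to the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Assemble a grounded prompt from the retrieved passages (hypothetical wording)."""
    context = "\n".join(f"- {d}" for score, d in retrieve(query, docs, k) if score > 0)
    return (
        "Answer using the context below. Reply in the user's language.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


KNOWLEDGE = [
    "Beirut is the capital of Lebanon.",
    "The cedar tree appears on the flag of Lebanon.",
    "Baalbek is known for its Roman temple ruins.",
]

if __name__ == "__main__":
    print(build_prompt("What is the capital of Lebanon?", KNOWLEDGE))
```

A production system would more likely use dense embeddings and a vector index, but the retrieve-then-prompt structure stays the same.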

After testing several models, we chose Google Text-to-Speech (gTTS) for its fast, resource-efficient performance, which allowed us to meet the real-time demands essential to a fluid, interactive experience with our avatar. Our custom avatar, named LIA (Lebanese Information Assistant), uses a female voice from gTTS to create a friendly, welcoming persona.

Step 4: Lip Synchronization

We integrated Rhubarb to synchronize lip movements with speech. Rhubarb translates audio into phonemes, mapping them to specific mouth shapes (visemes) for realistic, real-time lip syncing. By pairing these animations with LIA’s spoken output, we created an immersive, human-like interaction for users.
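Rhubarb’s JSON export contains a `mouthCues` list of `start`/`end`/`value` entries, where `value` is one of its mouth shapes (A to F, plus the extended G, H, and X). The source does not describe how these cues drive the 3D model, so the sketch below is an assumption: it maps each shape to hypothetical morph-target names and looks up the active shape for the current playback time with a binary search.

```python
import bisect
import json
from typing import Dict, List

# Rhubarb mouth shapes mapped to hypothetical morph-target names on the face rig.
SHAPE_TO_MORPH: Dict[str, str] = {
    "A": "mouth_closed",    # P, B, M
    "B": "mouth_teeth",     # slightly open, clenched teeth: most consonants
    "C": "mouth_open",      # EH, AE
    "D": "mouth_wide",      # AA
    "E": "mouth_rounded",   # AO, ER
    "F": "mouth_puckered",  # UW, OW, W
    "G": "mouth_f_v",       # upper teeth on lower lip: F, V
    "H": "mouth_tongue_l",  # tongue raised: long L sounds
    "X": "mouth_rest",      # idle / silence
}


class LipSyncTrack:
    """Look up the active mouth shape from Rhubarb's JSON export."""

    def __init__(self, rhubarb_json: str) -> None:
        cues = json.loads(rhubarb_json)["mouthCues"]
        self.cues: List[dict] = sorted(cues, key=lambda c: c["start"])
        self.starts: List[float] = [c["start"] for c in self.cues]

    def morph_at(self, t: float) -> str:
        """Return the morph target for playback time t (seconds)."""
        i = bisect.bisect_right(self.starts, t) - 1
        if i < 0 or t >= self.cues[i]["end"]:
            return SHAPE_TO_MORPH["X"]  # outside every cue: rest pose
        return SHAPE_TO_MORPH[self.cues[i]["value"]]


# Illustrative cues, not output from the project.
SAMPLE = json.dumps({
    "metadata": {"soundFile": "reply.wav", "duration": 0.6},
    "mouthCues": [
        {"start": 0.00, "end": 0.10, "value": "X"},
        {"start": 0.10, "end": 0.25, "value": "B"},
        {"start": 0.25, "end": 0.45, "value": "D"},
        {"start": 0.45, "end": 0.60, "value": "X"},
    ],
})

if __name__ == "__main__":
    track = LipSyncTrack(SAMPLE)
    for t in (0.05, 0.2, 0.3, 0.7):
        print(t, track.morph_at(t))
```

In a render loop, `morph_at` would be called once per frame with the audio player’s current position, so the mouth stays aligned with playback even if frames are dropped.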

Our final implementation met key project goals in real-time multilingual interaction, delivering marked improvements across speech recognition, text generation, and lip synchronization.

Below are the final results showcasing the project.

This project demonstrates the potential of real-time, multilingual avatars across various fields. Our avatar, LIA, could serve as a virtual assistant in customer service, a multilingual tutor in educational settings, or an interactive guide in tourism. The system’s adaptability enables it to handle personalized interactions across Arabic, English, and French with natural language responses and accurate lip sync. With future enhancements, LIA could be deployed on more powerful GPUs, reducing latency further and allowing for broader applications in high-demand environments.